UnScene3D: Unsupervised 3D Instance Segmentation for Indoor Scenes

¹Technical University of Munich, ²NVIDIA

We propose UnScene3D, the first fully unsupervised 3D learning approach for class-agnostic 3D instance segmentation of indoor scans. UnScene3D first generates pseudo masks by leveraging self-supervised color and geometry features to find potential object regions. We operate on a geometric oversegmentation of the scene, which enables efficient representation and learning on high-resolution 3D data. The coarse proposals are then refined by self-training our model on its own predictions. Our approach improves over state-of-the-art unsupervised 3D instance segmentation methods by more than 300% in Average Precision, demonstrating effective instance segmentation even in challenging, cluttered 3D scenes.


Generation of Pseudo Masks

First, we take self-supervised pretrained features from both the 2D and 3D domains and aggregate these features on a geometric oversegmentation of the scene. Combining deep features from pretrained models with the geometric oversegmentation lets us efficiently represent both low- and high-level properties of the scene. From there, we follow a greedy approach that iteratively separates foreground and background partitions by applying the Normalized Cut algorithm to the segment features. As the animation shows, after every iteration we mask out the segment features that have already been assigned to a prediction, and we continue until we reach the desired number of pseudo masks or no segments are left in the scene.
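The two steps in this paragraph can be sketched in Python with NumPy: mean-pooling per-point features onto oversegmentation segments, then greedily extracting foreground partitions with a spectral Normalized Cut and masking them out. This is a minimal sketch under stated assumptions, not the UnScene3D implementation: the thresholded cosine affinity (`tau`, `eps`), splitting the eigenvector at its mean, treating the side with the largest absolute eigenvector entry as foreground, the stopping rule on the relaxed cut cost (`max_cost`), and all function names are our own hypothetical choices.

```python
import numpy as np

# Hypothetical sketch: segment-level features + greedy Normalized Cut.
# Affinity, split point, foreground heuristic and stopping rule are assumptions.


def aggregate_segment_features(point_feats, segment_ids, num_segments):
    """Average per-point features (P x C) over each oversegmentation segment."""
    sums = np.zeros((num_segments, point_feats.shape[1]))
    np.add.at(sums, segment_ids, point_feats)           # scatter-add features per segment
    counts = np.bincount(segment_ids, minlength=num_segments)
    return sums / np.maximum(counts, 1)[:, None]        # empty segments stay zero


def ncut_bipartition(feats, tau=0.5, eps=1e-5):
    """One spectral Normalized Cut; returns (foreground mask, relaxed cut cost)."""
    f = feats / np.clip(np.linalg.norm(feats, axis=1, keepdims=True), 1e-8, None)
    W = np.where(f @ f.T > tau, 1.0, eps)               # thresholded cosine affinity
    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Symmetric normalized Laplacian I - D^{-1/2} W D^{-1/2}
    L_sym = np.eye(len(d)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    vals, vecs = np.linalg.eigh(L_sym)                  # eigenvalues in ascending order
    # Map back to the generalized eigenproblem (D - W) y = lambda * D * y
    y = d_inv_sqrt * vecs[:, 1]
    part = y > y.mean()
    # Heuristic: the side holding the largest |y| entry is the foreground
    fg = part if part[np.argmax(np.abs(y))] else ~part
    return fg, vals[1]


def greedy_pseudo_masks(seg_feats, max_masks=5, max_cost=0.5, **ncut_kwargs):
    """Repeatedly cut out a foreground group and mask it out of the remaining segments."""
    remaining = np.arange(len(seg_feats))
    masks = []
    while len(masks) < max_masks and len(remaining) >= 2:
        fg, cost = ncut_bipartition(seg_feats[remaining], **ncut_kwargs)
        if cost > max_cost or fg.all():                 # no well-separated group left
            break
        masks.append(remaining[fg])                     # segment indices of this proposal
        remaining = remaining[~fg]                      # mask out already-assigned segments
    return masks
```

The `max_cost` check keeps the loop from splitting a single coherent group: in a toy case with two well-separated groups of segment features, the first cut isolates one group at a cost near zero, while cutting the remaining group costs about 1, so the loop stops there.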

Self-Training Cycles for Refinement

While the pseudo masks already provide a good set of initial proposals, we further refine them by training our model on its own predictions. This self-training process effectively densifies and cleans the originally sparse pseudo masks, resulting in more accurate instance segmentation.

After every training iteration, we generate a new set of predictions and combine them with the initial masks to create a new, enriched set of pseudo training masks. We show the results of our self-training cycles on the ScanNet dataset: the first row shows the initial pseudo masks, while the second and third rows show the self-training stages after one and three training iterations, respectively.

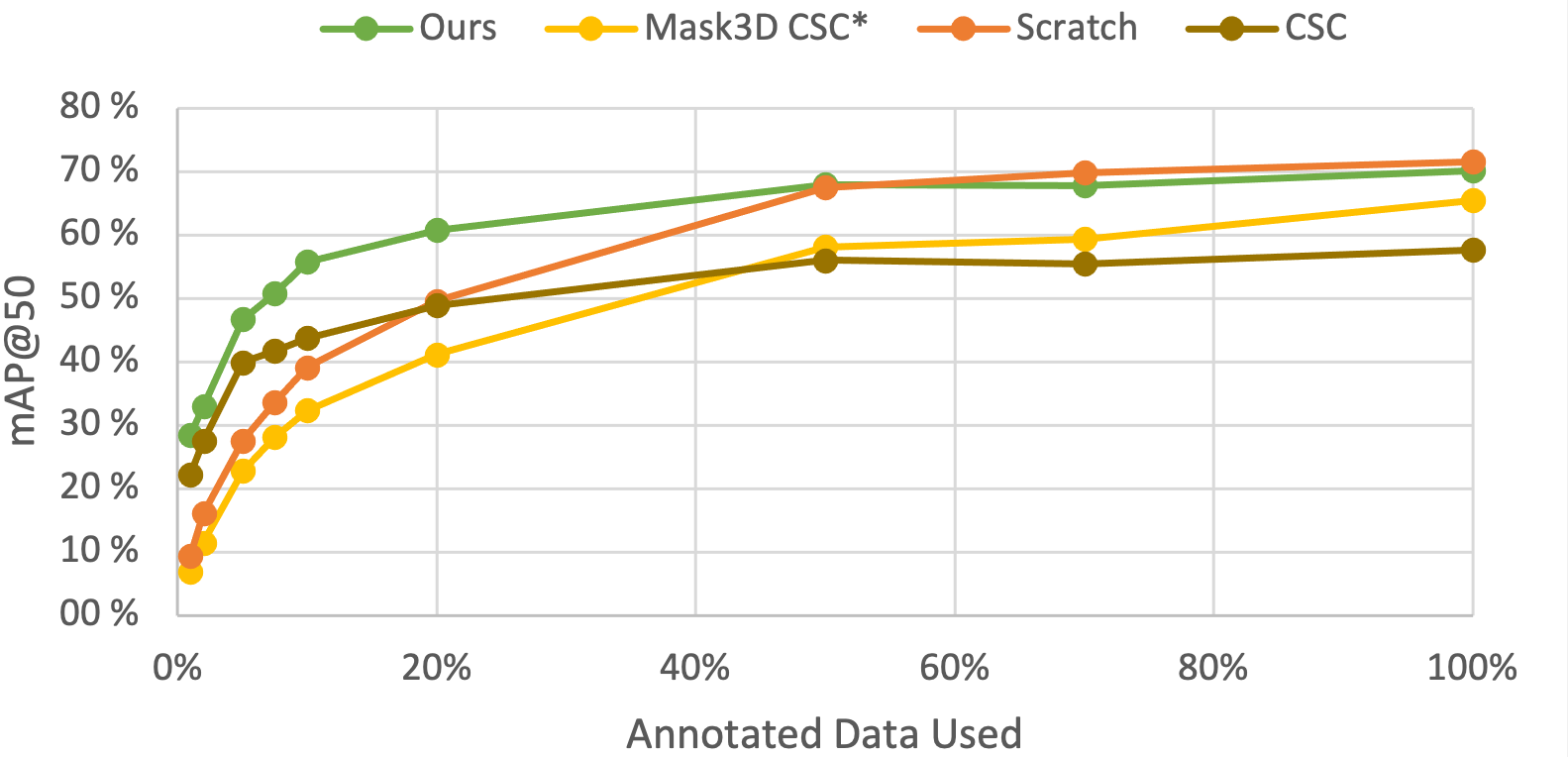
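The page does not spell out how new predictions are combined with the initial pseudo masks, so the following Python sketch assumes one plausible rule: keep every existing pseudo mask and add each sufficiently confident prediction whose IoU with all existing masks stays below a threshold. Masks are boolean arrays over oversegmentation segments; `merge_pseudo_masks`, `mask_iou`, `score_thresh`, and `iou_thresh` are hypothetical names and values, not the authors' code.

```python
import numpy as np

# Hypothetical merging rule for self-training rounds; the exact rule used by
# UnScene3D is not described here and may differ.


def mask_iou(a, b):
    """Pairwise IoU between boolean masks a (M x N) and b (K x N) over N segments."""
    a = a.astype(np.float64)
    b = b.astype(np.float64)
    inter = a @ b.T
    union = a.sum(axis=1)[:, None] + b.sum(axis=1)[None, :] - inter
    return inter / np.maximum(union, 1e-8)


def merge_pseudo_masks(pseudo, preds, scores, score_thresh=0.5, iou_thresh=0.5):
    """Keep all current pseudo masks and add confident, non-overlapping predictions."""
    merged = list(pseudo)
    for idx in np.argsort(-scores):                 # most confident predictions first
        if scores[idx] < score_thresh:
            break                                   # remaining predictions are less confident
        cand = preds[idx]
        if merged and mask_iou(cand[None], np.stack(merged)).max() >= iou_thresh:
            continue                                # already covered by an existing mask
        merged.append(cand)
    if not merged:
        return np.zeros((0, preds.shape[1]), dtype=bool)
    return np.stack(merged)
```

Processing predictions in descending score order means that when two new predictions overlap each other, the more confident one is kept.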

UnScene3D as Pretraining for Data Efficient Scenarios

UnScene3D learns powerful class-agnostic object properties, which can be used for downstream tasks such as data-efficient dense 3D instance segmentation. With our approach, we improve over the previous state-of-the-art self-supervised pretraining method in limited-data scenarios.

Fully Unsupervised Instance Segmentations

Figure panels: Input Meshes, Unsupervised Predictions, GT Instances.

Acknowledgments

We want to acknowledge concurrent methods in the 2D domain that inspired our two-stage pipeline for fully unsupervised instance segmentation. FreeSOLO and CutLER both first generate a noisy and sparse set of pseudo annotations, then follow an iterative self-training strategy to improve the quality of these masks. Moreover, CutLER also uses a modification of the Normalized Cut algorithm to generate the initial pseudo annotations. Additionally, we thank the authors of Mask3D for their powerful backbone architecture, which we use in our work as the self-trained model.

BibTeX

@inproceedings{rozenberszki2024unscene3d,
  title={UnScene3D: Unsupervised 3D Instance Segmentation for Indoor Scenes},
  author={Rozenberszki, David and Litany, Or and Dai, Angela},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}